CUDA debugging utilities.

More...

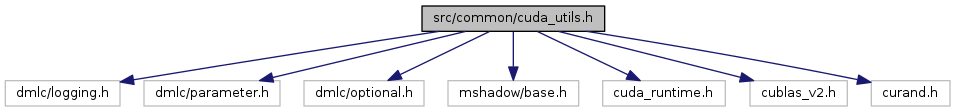

#include <dmlc/logging.h>

#include <dmlc/parameter.h>

#include <dmlc/optional.h>

#include <mshadow/base.h>

#include <cuda_runtime.h>

#include <cublas_v2.h>

#include <curand.h>

Go to the source code of this file.

CUDA debugging utilities.

| #define CHECK_CUDA_ERROR |

( |

|

msg | ) |

|

Value:{ \

cudaError_t e = cudaGetLastError(); \

CHECK_EQ(e, cudaSuccess) << (msg) << " CUDA: " << cudaGetErrorString(e); \

}

Check CUDA error.

- Parameters

-

| msg | Message to print if an error occured. |

| #define CUBLAS_CALL |

( |

|

func | ) |

|

Value:{ \

cublasStatus_t e = (func); \

CHECK_EQ(e, CUBLAS_STATUS_SUCCESS) \

}

const char * CublasGetErrorString(cublasStatus_t error)

Get string representation of cuBLAS errors.

Definition: cuda_utils.h:64

Protected cuBLAS call.

- Parameters

-

It checks for cuBLAS errors after invocation of the expression.

| #define CUDA_CALL |

( |

|

func | ) |

|

Value:{ \

cudaError_t e = (func); \

CHECK(e == cudaSuccess || e == cudaErrorCudartUnloading) \

<< "CUDA: " << cudaGetErrorString(e); \

}

Protected CUDA call.

- Parameters

-

It checks for CUDA errors after invocation of the expression.

| #define CUDA_NOUNROLL _Pragma("nounroll") |

| #define CUDA_UNROLL _Pragma("unroll") |

| #define CURAND_CALL |

( |

|

func | ) |

|

Value:{ \

curandStatus_t e = (func); \

CHECK_EQ(e, CURAND_STATUS_SUCCESS) \

}

const char * CurandGetErrorString(curandStatus_t status)

Get string representation of cuRAND errors.

Definition: cuda_utils.h:124

Protected cuRAND call.

- Parameters

-

It checks for cuRAND errors after invocation of the expression.

| #define CUSOLVER_CALL |

( |

|

func | ) |

|

Value:{ \

cusolverStatus_t e = (func); \

CHECK_EQ(e, CUSOLVER_STATUS_SUCCESS) \

}

const char * CusolverGetErrorString(cusolverStatus_t error)

Get string representation of cuSOLVER errors.

Definition: cuda_utils.h:95

Protected cuSolver call.

- Parameters

-

It checks for cuSolver errors after invocation of the expression.

| #define MXNET_CUDA_ALLOW_TENSOR_CORE_DEFAULT true |

| int ComputeCapabilityMajor |

( |

int |

device_id | ) |

|

|

inline |

Determine major version number of the gpu's cuda compute architecture.

- Parameters

-

| device_id | The device index of the cuda-capable gpu of interest. |

- Returns

- the major version number of the gpu's cuda compute architecture.

| int ComputeCapabilityMinor |

( |

int |

device_id | ) |

|

|

inline |

Determine minor version number of the gpu's cuda compute architecture.

- Parameters

-

| device_id | The device index of the cuda-capable gpu of interest. |

- Returns

- the minor version number of the gpu's cuda compute architecture.

| bool GetEnvAllowTensorCore |

( |

| ) |

|

|

inline |

Returns global policy for TensorCore algo use.

- Returns

- whether to allow TensorCore algo (if not specified by the Operator locally).

| int SMArch |

( |

int |

device_id | ) |

|

|

inline |

Return the integer SM architecture (e.g. Volta = 70).

- Parameters

-

| device_id | The device index of the cuda-capable gpu of interest. |

- Returns

- the gpu's cuda compute architecture as an int.

| bool SupportsFloat16Compute |

( |

int |

device_id | ) |

|

|

inline |

Determine whether a cuda-capable gpu's architecture supports float16 math.

- Parameters

-

| device_id | The device index of the cuda-capable gpu of interest. |

- Returns

- whether the gpu's architecture supports float16 math.

| bool SupportsTensorCore |

( |

int |

device_id | ) |

|

|

inline |

Determine whether a cuda-capable gpu's architecture supports Tensor Core math.

- Parameters

-

| device_id | The device index of the cuda-capable gpu of interest. |

- Returns

- whether the gpu's architecture supports Tensor Core math.

1.8.11

1.8.11