
Common CUDA utilities.

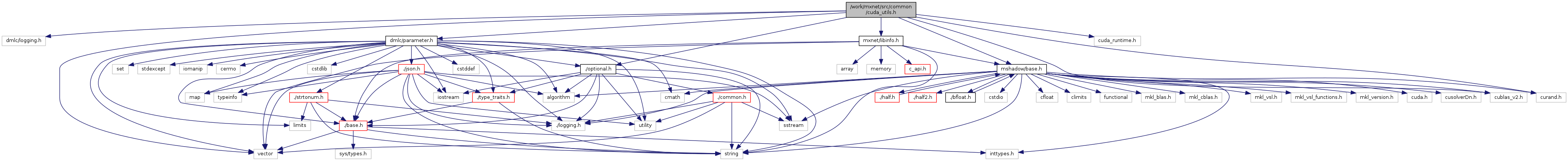

#include <dmlc/logging.h>
#include <dmlc/parameter.h>
#include <dmlc/optional.h>
#include <mshadow/base.h>
#include <mxnet/libinfo.h>
#include <cuda_runtime.h>
#include <cublas_v2.h>
#include <curand.h>
#include <vector>


Classes

| Kind | Name | Description |
|---|---|---|
| struct | `mxnet::common::cuda::CublasType<DType>` | Converts between C++ datatypes and enums/constants needed by cuBLAS. |
| struct | `mxnet::common::cuda::CublasType<float>` | |
| struct | `mxnet::common::cuda::CublasType<double>` | |
| struct | `mxnet::common::cuda::CublasType<mshadow::half::half_t>` | |
| struct | `mxnet::common::cuda::CublasType<uint8_t>` | |
| struct | `mxnet::common::cuda::CublasType<int32_t>` | |
| class | `mxnet::common::cuda::DeviceStore` | |
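`CublasType<DType>` is a traits template: each specialization maps a C++ element type to the constants a cuBLAS call needs (in cuBLAS, values such as `CUDA_R_32F`). The sketch below illustrates the idea without cuBLAS; the enum `DataTypeFlag`, the member `kFlag`, and the helper `FlagOf` are hypothetical stand-ins, not the real header's members, and the `half_t` specialization is omitted to avoid the mshadow dependency.

```cpp
#include <cstdint>

// Hypothetical stand-in for the cuBLAS data-type enum (e.g. CUDA_R_32F).
enum class DataTypeFlag { kR32F, kR64F, kR8U, kR32I };

// The primary template is declared but never defined, so using an
// unsupported element type is a compile-time error.
template <typename DType>
struct CublasTypeSketch;

template <> struct CublasTypeSketch<float> {
  static constexpr DataTypeFlag kFlag = DataTypeFlag::kR32F;
};
template <> struct CublasTypeSketch<double> {
  static constexpr DataTypeFlag kFlag = DataTypeFlag::kR64F;
};
template <> struct CublasTypeSketch<std::uint8_t> {
  static constexpr DataTypeFlag kFlag = DataTypeFlag::kR8U;
};
template <> struct CublasTypeSketch<std::int32_t> {
  static constexpr DataTypeFlag kFlag = DataTypeFlag::kR32I;
};

// Generic code selects the flag from the element type at compile time.
template <typename DType>
constexpr DataTypeFlag FlagOf() {
  return CublasTypeSketch<DType>::kFlag;
}
```

Because the lookup happens at compile time, a templated GEMM wrapper can pass `FlagOf<DType>()` to the library without any runtime branching on the element type.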

Namespaces

| Namespace | Description |
|---|---|
| `mxnet` | namespace of mxnet |
| `mxnet::common` | |
| `mxnet::common::cuda` | common utils for cuda |

Macros

| Definition | Description |
|---|---|
| `#define QUOTE(x) #x` | Macros/inlines to assist CLion to parse CUDA files (`*.cu`, `*.cuh`). |
| `#define QUOTEVALUE(x) QUOTE(x)` | |
| `#define STATIC_ASSERT_CUDA_VERSION_GE(min_version)` | |
| `#define CHECK_CUDA_ERROR(msg)` | Check CUDA error. |
| `#define CUDA_CALL(func)` | Protected CUDA call. |
| `#define CUBLAS_CALL(func)` | Protected cuBLAS call. |
| `#define CUSOLVER_CALL(func)` | Protected cuSOLVER call. |
| `#define CURAND_CALL(func)` | Protected cuRAND call. |
| `#define NVRTC_CALL(x)` | Protected NVRTC call. |
| `#define CUDA_DRIVER_CALL(func)` | Protected CUDA driver call. |
| `#define CUDA_UNROLL _Pragma("unroll")` | |
| `#define CUDA_NOUNROLL _Pragma("nounroll")` | |
| `#define MXNET_CUDA_ALLOW_TENSOR_CORE_DEFAULT true` | |
| `#define MXNET_CUDA_TENSOR_OP_MATH_ALLOW_CONVERSION_DEFAULT false` | |

Functions

| Return type | Function | Description |
|---|---|---|
| `const char *` | `mxnet::common::cuda::CublasGetErrorString(cublasStatus_t error)` | Get string representation of cuBLAS errors. |
| `const char *` | `mxnet::common::cuda::CusolverGetErrorString(cusolverStatus_t error)` | Get string representation of cuSOLVER errors. |
| `const char *` | `mxnet::common::cuda::CurandGetErrorString(curandStatus_t status)` | Get string representation of cuRAND errors. |
| `template<typename DType> DType __device__` | `mxnet::common::cuda::CudaMax(DType a, DType b)` | |
| `template<typename DType> DType __device__` | `mxnet::common::cuda::CudaMin(DType a, DType b)` | |
| `int` | `mxnet::common::cuda::get_load_type(size_t N)` | Get the largest datatype suitable to read the requested number of bytes. |
| `int` | `mxnet::common::cuda::get_rows_per_block(size_t row_size, int num_threads_per_block)` | Determine how many rows of a 2D matrix a block of threads should handle, based on the row size and the number of threads in the block. |
| `int` | `cudaAttributeLookup(int device_id, std::vector<int32_t> *cached_values, cudaDeviceAttr attr, const char *attr_name)` | Return an attribute of GPU device_id. |
| `int` | `ComputeCapabilityMajor(int device_id)` | Determine the major version number of the GPU's CUDA compute architecture. |
| `int` | `ComputeCapabilityMinor(int device_id)` | Determine the minor version number of the GPU's CUDA compute architecture. |
| `int` | `SMArch(int device_id)` | Return the integer SM architecture (e.g. Volta = 70). |
| `int` | `MultiprocessorCount(int device_id)` | Return the number of streaming multiprocessors of GPU device_id. |
| `int` | `MaxSharedMemoryPerMultiprocessor(int device_id)` | Return the shared memory size in bytes of each of the GPU's streaming multiprocessors. |
| `bool` | `SupportsCooperativeLaunch(int device_id)` | Return whether GPU device_id supports cooperative-group kernel launching. |
| `bool` | `SupportsFloat16Compute(int device_id)` | Determine whether a CUDA-capable GPU's architecture supports float16 math. Assume not if device_id is negative. |
| `bool` | `SupportsTensorCore(int device_id)` | Determine whether a CUDA-capable GPU's architecture supports Tensor Core math. Assume not if device_id is negative. |
| `bool` | `GetEnvAllowTensorCore()` | Returns the global policy for Tensor Core algorithm use. |
| `bool` | `GetEnvAllowTensorCoreConversion()` | Returns the global policy for Tensor Core implicit type casting. |
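The one-line descriptions of `get_load_type` and `get_rows_per_block` leave the rules implicit. The sketch below shows one way each could work. `LoadWidthBytes` picks the widest power-of-two load that divides `N` evenly; it returns a byte width, whereas the real function returns an `int` type code, so treat the return convention as an assumption. `RowsPerBlockSketch` is a hypothetical heuristic assuming 32-thread warps, not MXNet's actual rule.

```cpp
#include <cassert>
#include <cstddef>

// Choose the widest load (8, 4, 2 or 1 bytes) that divides N evenly,
// so N bytes can be read with whole loads of a single size.
constexpr int LoadWidthBytes(std::size_t N) {
  return N % 8 == 0 ? 8 : N % 4 == 0 ? 4 : N % 2 == 0 ? 2 : 1;
}

// Hypothetical heuristic: give each row enough warps to cover it with one
// element per thread (capped at the whole block), and let the block's
// remaining warps work on further rows.
inline int RowsPerBlockSketch(std::size_t row_size, int num_threads_per_block) {
  const int kWarpSize = 32;
  assert(num_threads_per_block % kWarpSize == 0);
  const int warps_per_block = num_threads_per_block / kWarpSize;
  std::size_t warps_per_row = (row_size + kWarpSize - 1) / kWarpSize;
  if (warps_per_row == 0) warps_per_row = 1;  // an empty row still gets a warp
  if (warps_per_row >= static_cast<std::size_t>(warps_per_block)) return 1;
  return warps_per_block / static_cast<int>(warps_per_row);
}
```

For example, with 256 threads (8 warps) and 64-element rows, each row needs 2 warps, so this heuristic assigns 4 rows per block. A 12-byte read uses 4-byte loads, because 8 does not divide 12.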

Variables

| Type | Variable | Description |
|---|---|---|
| `constexpr size_t` | `kMaxNumGpus = 64` | Maximum number of GPUs. |

Common CUDA utilities.

Copyright (c) 2015 by Contributors

| #define CHECK_CUDA_ERROR | ( | msg | ) |

Check CUDA error.

When compiling a device function, check that the architecture is >= Kepler (3.0). Note that `__CUDA_ARCH__` is not defined outside of a device function.

| msg | Message to print if an error occurred. |

| #define CUBLAS_CALL | ( | func | ) |

Protected cuBLAS call.

| func | Expression to call. |

It checks for cuBLAS errors after invocation of the expression.

| #define CUDA_CALL | ( | func | ) |

Protected CUDA call.

| func | Expression to call. |

It checks for CUDA errors after invocation of the expression.
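The protected-call macros share one pattern: evaluate the expression exactly once, compare the returned status with the library's success value, and fail with a readable message otherwise. A minimal stand-alone sketch follows. `FakeStatus`, `FakeGetErrorString` and `FakeMalloc` are hypothetical stand-ins for `cudaError_t`, `cudaGetErrorString` and a real CUDA call, and throwing an exception stands in for MXNet's logging-based failure.

```cpp
#include <cassert>
#include <sstream>
#include <stdexcept>
#include <string>

// Hypothetical status type standing in for cudaError_t.
enum FakeStatus { kFakeSuccess = 0, kFakeOutOfMemory = 2 };

inline const char* FakeGetErrorString(FakeStatus s) {
  return s == kFakeSuccess ? "no error" : "out of memory";
}

// Evaluate `func` once, then fail loudly if it did not return success.
// The do/while(0) wrapper makes the macro behave like a single statement.
#define SKETCH_CUDA_CALL(func)                                 \
  do {                                                         \
    FakeStatus e = (func);                                     \
    if (e != kFakeSuccess) {                                   \
      std::ostringstream os;                                   \
      os << #func << " failed: " << FakeGetErrorString(e);     \
      throw std::runtime_error(os.str());                      \
    }                                                          \
  } while (0)

// Hypothetical call that succeeds or fails on demand.
inline FakeStatus FakeMalloc(bool ok) {
  return ok ? kFakeSuccess : kFakeOutOfMemory;
}
```

Stringizing `func` with `#func` puts the failing call's source text in the message, which is usually more useful than the error code alone.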

| #define CUDA_DRIVER_CALL | ( | func | ) |

Protected CUDA driver call.

| func | Expression to call. |

It checks for CUDA driver errors after invocation of the expression.

| #define CUDA_NOUNROLL _Pragma("nounroll") |

| #define CUDA_UNROLL _Pragma("unroll") |
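`CUDA_UNROLL` and `CUDA_NOUNROLL` wrap `#pragma unroll` and `#pragma nounroll` in `_Pragma` so the loop hint can be emitted from a macro. They are meant to sit directly before a loop, typically in device code. `#pragma unroll` is understood by nvcc and Clang, while other host compilers may simply ignore unknown pragmas (GCC warns under `-Wall`). A minimal host-side sketch with hypothetical helper functions:

```cpp
#include <cassert>

#define CUDA_UNROLL _Pragma("unroll")
#define CUDA_NOUNROLL _Pragma("nounroll")

// Fixed trip count known at compile time: a good candidate for full unrolling.
inline void Scale4(float* v, float s) {
  CUDA_UNROLL
  for (int i = 0; i < 4; ++i) {
    v[i] *= s;
  }
}

// Trip count known only at run time: keep the loop rolled (e.g. to limit code size).
inline int SumUpTo(int n) {
  int total = 0;
  CUDA_NOUNROLL
  for (int i = 1; i <= n; ++i) {
    total += i;
  }
  return total;
}
```

The pragmas are only hints; the loops compute the same results whether or not a compiler honors them.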

| #define CURAND_CALL | ( | func | ) |

Protected cuRAND call.

| func | Expression to call. |

It checks for cuRAND errors after invocation of the expression.

| #define CUSOLVER_CALL | ( | func | ) |

Protected cuSOLVER call.

| func | Expression to call. |

It checks for cuSOLVER errors after invocation of the expression.

| #define MXNET_CUDA_ALLOW_TENSOR_CORE_DEFAULT true |

| #define MXNET_CUDA_TENSOR_OP_MATH_ALLOW_CONVERSION_DEFAULT false |

| #define NVRTC_CALL | ( | x | ) |

Protected NVRTC call.

| x | Expression to call. |

It checks for NVRTC errors after invocation of the expression.

| #define QUOTE | ( | x | ) | #x |

Macros/inlines to assist CLion to parse CUDA files (`*.cu`, `*.cuh`).

| #define QUOTEVALUE | ( | x | ) | QUOTE(x) |

| #define STATIC_ASSERT_CUDA_VERSION_GE | ( | min_version | ) |
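`QUOTE` stringizes its argument exactly as written, while `QUOTEVALUE` adds one level of indirection so the argument is macro-expanded first. That difference lets a compile-time check print a version number rather than a macro name. The sketch below uses a hypothetical `FAKE_CUDA_VERSION` in place of `CUDA_VERSION` and a hypothetical `SKETCH_ASSERT_CUDA_VERSION_GE`; it only assumes that `STATIC_ASSERT_CUDA_VERSION_GE` is built along similar lines.

```cpp
#include <cassert>
#include <string>

#define QUOTE(x) #x
#define QUOTEVALUE(x) QUOTE(x)

// Hypothetical stand-in for CUDA_VERSION (1000 * major + 10 * minor, so 10.2 -> 10020).
#define FAKE_CUDA_VERSION 10020

// Version guard: fails to compile, with a readable message, if the
// toolkit is older than min_version.
#define SKETCH_ASSERT_CUDA_VERSION_GE(min_version)               \
  static_assert(FAKE_CUDA_VERSION >= (min_version),              \
                "CUDA version " QUOTEVALUE(FAKE_CUDA_VERSION)    \
                " is older than required version " QUOTEVALUE(min_version))

SKETCH_ASSERT_CUDA_VERSION_GE(9000);  // compiles: 10020 >= 9000
// SKETCH_ASSERT_CUDA_VERSION_GE(11000);  // would fail, naming 10020 and 11000
```

With plain `QUOTE(FAKE_CUDA_VERSION)` the message would read `"FAKE_CUDA_VERSION"`, which is why the two-level form is needed.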

| int ComputeCapabilityMajor | ( | int device_id | ) | inline |

Determine the major version number of the GPU's CUDA compute architecture.

| device_id | The device index of the CUDA-capable GPU of interest. |

| int ComputeCapabilityMinor | ( | int device_id | ) | inline |

Determine the minor version number of the GPU's CUDA compute architecture.

| device_id | The device index of the CUDA-capable GPU of interest. |

| int cudaAttributeLookup | ( | int device_id, std::vector< int32_t > * cached_values, cudaDeviceAttr attr, const char * attr_name | ) | inline |

Return an attribute of GPU device_id.

| device_id | The device index of the CUDA-capable GPU of interest. |

| cached_values | An array of attributes for already-looked-up GPUs. |

| attr | The attribute, by number. |

| attr_name | A string representation of the attribute, for error messages. |
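The `cached_values` parameter points to per-device memoization: query each device's attribute once, then serve later calls from the cache. A minimal sketch under that assumption follows. `QueryAttribute` and `g_query_count` are hypothetical stand-ins for the real driver query (in CUDA, `cudaDeviceGetAttribute(int* value, cudaDeviceAttr attr, int device)`), and `-1` as the "not yet looked up" marker is an assumption of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kMaxNumGpus = 64;  // same limit as the header

// Hypothetical stand-in for a driver query; counts how often it runs.
int g_query_count = 0;
inline int QueryAttribute(int device_id) {
  ++g_query_count;
  return 80 + device_id;  // fake per-device value
}

// Look up the attribute for device_id, filling the cache on first use.
inline int AttributeLookupSketch(int device_id,
                                 std::vector<std::int32_t>* cached_values) {
  assert(device_id >= 0 && static_cast<std::size_t>(device_id) < kMaxNumGpus);
  if (cached_values->size() < kMaxNumGpus) {
    cached_values->resize(kMaxNumGpus, -1);  // -1: not looked up yet
  }
  std::int32_t& slot = (*cached_values)[device_id];
  if (slot == -1) {
    slot = QueryAttribute(device_id);
  }
  return slot;
}
```

Sizing the cache by `kMaxNumGpus` keeps indexing by `device_id` trivial. This sketch provides no synchronization, so a cache shared between threads would need a lock or per-thread storage.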

| bool GetEnvAllowTensorCore | ( | | ) | inline |

Returns the global policy for Tensor Core algorithm use.

| bool GetEnvAllowTensorCoreConversion | ( | | ) | inline |

Returns the global policy for Tensor Core implicit type casting.
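The two `GetEnv...` policies have defaults that appear to come from `MXNET_CUDA_ALLOW_TENSOR_CORE_DEFAULT` (true) and `MXNET_CUDA_TENSOR_OP_MATH_ALLOW_CONVERSION_DEFAULT` (false). A sketch of reading such a policy from the environment follows. It assumes a variable named `MXNET_CUDA_ALLOW_TENSOR_CORE` and a simple `0`/`1`/`true`/`false` parser; the helper names, the parser, and the no-caching behavior are hypothetical, not taken from the real implementation.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

#define MXNET_CUDA_ALLOW_TENSOR_CORE_DEFAULT true

// Parse a boolean environment variable, falling back to default_value
// when it is unset or not recognized.
inline bool GetEnvBoolSketch(const char* name, bool default_value) {
  const char* raw = std::getenv(name);
  if (raw == nullptr) return default_value;
  if (std::strcmp(raw, "1") == 0 || std::strcmp(raw, "true") == 0) return true;
  if (std::strcmp(raw, "0") == 0 || std::strcmp(raw, "false") == 0) return false;
  return default_value;
}

// Assumed variable name; the environment is re-read on every call.
inline bool GetEnvAllowTensorCoreSketch() {
  return GetEnvBoolSketch("MXNET_CUDA_ALLOW_TENSOR_CORE",
                          MXNET_CUDA_ALLOW_TENSOR_CORE_DEFAULT);
}
```

Falling back to the compiled-in default for unrecognized values keeps a typo in the environment from silently disabling Tensor Cores.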

| int MaxSharedMemoryPerMultiprocessor | ( | int device_id | ) | inline |

Return the shared memory size in bytes of each of the GPU's streaming multiprocessors.

| device_id | The device index of the CUDA-capable GPU of interest. |

| int MultiprocessorCount | ( | int device_id | ) | inline |

Return the number of streaming multiprocessors of GPU device_id.

| device_id | The device index of the CUDA-capable GPU of interest. |

| int SMArch | ( | int device_id | ) | inline |

Return the integer SM architecture (e.g. Volta = 70).

| device_id | The device index of the CUDA-capable GPU of interest. |
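`SMArch` folds the two compute-capability numbers into one integer, so Volta (compute capability 7.0) becomes 70. The sketch below assumes the encoding is `10 * major + minor`, which matches the Volta = 70 example. Unlike the real function, which takes a `device_id` and queries the GPU, the hypothetical `SMArchFromCapability` takes the two numbers directly.

```cpp
// Combine compute capability major.minor into an SM number, e.g. 7.0 -> 70.
constexpr int SMArchFromCapability(int major, int minor) {
  return 10 * major + minor;
}
```

A single integer makes architecture gates such as `SMArch(dev) >= 70` one comparison instead of a two-field test.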

| bool SupportsCooperativeLaunch | ( | int device_id | ) | inline |

Return whether GPU device_id supports cooperative-group kernel launching.

| device_id | The device index of the CUDA-capable GPU of interest. |

| bool SupportsFloat16Compute | ( | int device_id | ) | inline |

Determine whether a CUDA-capable GPU's architecture supports float16 math. Assume not if device_id is negative.

| device_id | The device index of the CUDA-capable GPU of interest. |

| bool SupportsTensorCore | ( | int device_id | ) | inline |

Determine whether a CUDA-capable GPU's architecture supports Tensor Core math. Assume not if device_id is negative.

| device_id | The device index of the CUDA-capable GPU of interest. |
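Both predicates reduce to comparisons on compute capability, returning false for a negative `device_id` as documented. The sketch below assumes two thresholds: native fp16 arithmetic from compute capability 5.3, and Tensor Cores from 7.0 (Volta). These match NVIDIA's published architecture features, but this page does not show the exact rules the functions use. The hypothetical helpers take the capability numbers as arguments instead of querying a device.

```cpp
// A negative device_id means "no GPU", so both predicates answer false.
constexpr bool SupportsFloat16ComputeSketch(int device_id, int major, int minor) {
  return device_id >= 0 && (major > 5 || (major == 5 && minor >= 3));
}

constexpr bool SupportsTensorCoreSketch(int device_id, int major) {
  return device_id >= 0 && major >= 7;
}
```

Checking `device_id` first lets CPU-only code paths call these predicates unconditionally.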

| constexpr size_t kMaxNumGpus = 64 |

Maximum number of GPUs.

1.8.11
