|

mxnet

|

|

mxnet

|

implementation of CPU host code More...

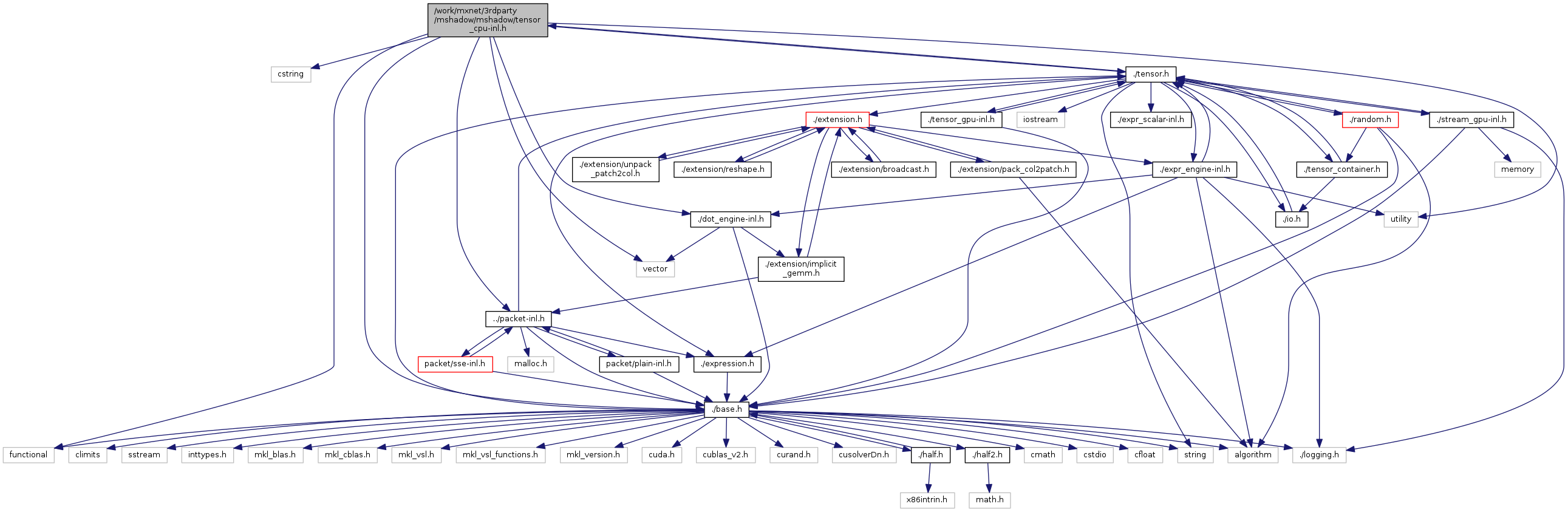

#include <cstring>#include <functional>#include <utility>#include <vector>#include "./base.h"#include "./tensor.h"#include "./packet-inl.h"#include "./dot_engine-inl.h"

Go to the source code of this file.

Classes | |

| struct | mshadow::MapExpCPUEngine< pass_check, Saver, R, dim, DType, E, etype > |

| struct | mshadow::MapExpCPUEngine< true, SV, Tensor< cpu, dim, DType >, dim, DType, E, etype > |

Namespaces | |

| mshadow | |

| namespace for mshadow | |

Functions | |

| template<> | |

| void | mshadow::InitTensorEngine< cpu > (int dev_id) |

| template<> | |

| void | mshadow::ShutdownTensorEngine< cpu > (void) |

| template<> | |

| void | mshadow::SetDevice< cpu > (int devid) |

| template<> | |

| Stream< cpu > * | mshadow::NewStream< cpu > (bool create_blas_handle, bool create_dnn_handle, int dev_id) |

| template<> | |

| void | mshadow::DeleteStream< cpu > (Stream< cpu > *stream) |

| template<int ndim> | |

| std::ostream & | mshadow::operator<< (std::ostream &os, const Shape< ndim > &shape) |

| allow string printing of the shape More... | |

| template<typename xpu > | |

| void * | mshadow::AllocHost_ (size_t size) |

| template<typename xpu > | |

| void | mshadow::FreeHost_ (void *dptr) |

| template<> | |

| void * | mshadow::AllocHost_< cpu > (size_t size) |

| template<> | |

| void | mshadow::FreeHost_< cpu > (void *dptr) |

| template<typename xpu , int dim, typename DType > | |

| void | mshadow::AllocHost (Tensor< cpu, dim, DType > *obj) |

| template<typename xpu , int dim, typename DType > | |

| void | mshadow::FreeHost (Tensor< cpu, dim, DType > *obj) |

| template<int dim, typename DType > | |

| void | mshadow::AllocSpace (Tensor< cpu, dim, DType > *obj, bool pad=MSHADOW_ALLOC_PAD) |

| CPU/CPU: allocate space for CTensor, according to the shape in the obj this function is responsible to set the stride_ in each obj.shape. More... | |

| template<typename Device , typename DType , int dim> | |

| Tensor< Device, dim, DType > | mshadow::NewTensor (const Shape< dim > &shape, DType initv, bool pad=MSHADOW_ALLOC_PAD, Stream< Device > *stream=NULL) |

| CPU/GPU: short cut to allocate and initialize a Tensor. More... | |

| template<int dim, typename DType > | |

| void | mshadow::FreeSpace (Tensor< cpu, dim, DType > *obj) |

| CPU/GPU: free the space of tensor, will set obj.dptr to NULL. More... | |

| template<int dim, typename DType > | |

| void | mshadow::Copy (Tensor< cpu, dim, DType > dst, const Tensor< cpu, dim, DType > &src, Stream< cpu > *stream=NULL) |

| copy data from one tensor to another, with same shape More... | |

| template<typename Saver , typename R , int dim, typename DType , typename E > | |

| void | mshadow::MapPlan (TRValue< R, cpu, dim, DType > *dst, const expr::Plan< E, DType > &plan) |

| template<typename Saver , typename R , int dim, typename DType , typename E , int etype> | |

| void | mshadow::MapExp (TRValue< R, cpu, dim, DType > *dst, const expr::Exp< E, DType, etype > &exp) |

| CPU/GPU: map a expression to a tensor, this function calls MapPlan. More... | |

| template<typename Saver , typename Reducer , typename R , typename DType , typename E , int etype> | |

| void | mshadow::MapReduceKeepLowest (TRValue< R, cpu, 1, DType > *dst, const expr::Exp< E, DType, etype > &exp, DType scale=1) |

| CPU/GPU: map a expression, do reduction to 1D Tensor in lowest dimension (dimension 0) More... | |

| template<typename Saver , typename Reducer , int dimkeep, typename R , typename DType , typename E , int etype> | |

| void | mshadow::MapReduceKeepHighDim (TRValue< R, cpu, 1, DType > *dst, const expr::Exp< E, DType, etype > &exp, DType scale=1) |

| CPU/GPU: map a expression, do reduction to 1D Tensor in third dimension (dimension 2) More... | |

| template<typename DType > | |

| void | mshadow::Softmax (Tensor< cpu, 1, DType > dst, const Tensor< cpu, 1, DType > &energy) |

| template<typename DType > | |

| void | mshadow::SoftmaxGrad (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 2, DType > &src, const Tensor< cpu, 1, DType > &label) |

| CPU/GPU: softmax gradient. More... | |

| template<typename DType > | |

| void | mshadow::SmoothSoftmaxGrad (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 2, DType > &src, const Tensor< cpu, 1, DType > &label, const float alpha) |

| template<typename DType > | |

| void | mshadow::SoftmaxGrad (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 2, DType > &src, const Tensor< cpu, 1, DType > &label, const DType &ignore_label) |

| template<typename DType > | |

| void | mshadow::SmoothSoftmaxGrad (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 2, DType > &src, const Tensor< cpu, 1, DType > &label, const DType &ignore_label, const float alpha) |

| template<typename DType > | |

| void | mshadow::SoftmaxGrad (Tensor< cpu, 3, DType > dst, const Tensor< cpu, 3, DType > &src, const Tensor< cpu, 2, DType > &label) |

| template<typename DType > | |

| void | mshadow::SmoothSoftmaxGrad (Tensor< cpu, 3, DType > dst, const Tensor< cpu, 3, DType > &src, const Tensor< cpu, 2, DType > &label, const float alpha) |

| template<typename DType > | |

| void | mshadow::SoftmaxGrad (Tensor< cpu, 3, DType > dst, const Tensor< cpu, 3, DType > &src, const Tensor< cpu, 2, DType > &label, const DType &ignore_label) |

| template<typename DType > | |

| void | mshadow::SmoothSoftmaxGrad (Tensor< cpu, 3, DType > dst, const Tensor< cpu, 3, DType > &src, const Tensor< cpu, 2, DType > &label, const DType &ignore_label, const float alpha) |

| template<typename DType > | |

| void | mshadow::Softmax (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 2, DType > &energy) |

| CPU/GPU: normalize softmax: dst[i][j] = exp(energy[i][j]) /(sum_j exp(energy[i][j])) More... | |

| template<typename DType > | |

| void | mshadow::Softmax (Tensor< cpu, 3, DType > dst, const Tensor< cpu, 3, DType > &energy) |

| template<bool clip = true, typename IndexType , typename DType > | |

| void | mshadow::AddTakeGrad (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 1, IndexType > &index, const Tensor< cpu, 2, DType > &src) |

| CPU/GPU: Gradient accumulate of embedding matrix. dst[index[i]] += src[i] Called when the featuredim of src is much larger than the batchsize. More... | |

| template<typename IndexType , typename DType > | |

| void | mshadow::AddTakeGradLargeBatch (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 1, IndexType > &sorted, const Tensor< cpu, 1, IndexType > &index, const Tensor< cpu, 2, DType > &src) |

| CPU/GPU: Gradient accumulate of embedding matrix. dst[sorted[i]] += src[index[i]] Called when the batchsize of src is larger than the featuredim. More... | |

| template<typename IndexType , typename DType > | |

| void | mshadow::IndexFill (Tensor< cpu, 2, DType > dst, const Tensor< cpu, 1, IndexType > &index, const Tensor< cpu, 2, DType > &src) |

| CPU/GPU: Fill the values of the destination matrix to specific rows in the source matrix. dst[index[i]] = src[i] Will use atomicAdd in the inner implementation and the result may not be deterministic. More... | |

| template<typename KDType , typename VDType > | |

| void | mshadow::SortByKey (Tensor< cpu, 1, KDType > keys, Tensor< cpu, 1, VDType > values, bool is_ascend=true) |

| CPU/GPU: Sort key-value pairs stored in separate places. (Stable sort is performed!) More... | |

| template<typename Device , typename VDType , typename SDType > | |

| void | mshadow::VectorizedSort (Tensor< Device, 1, VDType > values, Tensor< Device, 1, SDType > segments) |

| CPU/GPU: Sort the keys within each segment. (Stable sort is performed!) Segments is defined as an ascending ordered vector like [0, 0, 0, 1, 1, 2, 3, 3, 3,...] We sort separately the keys labeled by 0 and 1, 2, 3, etc. Currently only supports sorting in ascending order !! More... | |

| template<typename Device , typename DType > | |

| void | mshadow::VectorDot (Tensor< Device, 1, DType > dst, const Tensor< Device, 1, DType > &lhs, const Tensor< Device, 1, DType > &rhs) |

| CPU/GPU: 1 dimension vector dot. More... | |

| template<bool transpose_left, bool transpose_right, typename Device , typename DType > | |

| void | mshadow::BatchGEMM (Tensor< Device, 3, DType > dst, const Tensor< Device, 3, DType > &lhs, const Tensor< Device, 3, DType > &rhs, DType alpha, DType beta, Tensor< Device, 1, DType * > workspace) |

| CPU/GPU: dst = alpha * op(lhs) op(rhs) + beta * dst. More... | |

implementation of CPU host code

Copyright (c) 2014 by Contributors

1.8.11

1.8.11